Autonomous Fruit Picking Robot

This graduation project was supervised by the University of Lincoln, UK, and Ain Shams University, Egypt. Its main goal is to design and build an autonomous platform suitable for multiple agricultural tasks. The project covers the design of the platform itself and of its first module, an autonomous fruit-picking module based on a 6-DoF robotic arm. The main requirements for the robot were:

- The robot should be able to navigate autonomously in an outdoor environment. Therefore, it must be able to localize itself within its working environment and to detect and avoid both static and dynamic obstacles.

- The robot should build a map of the environment the first time it operates there, and then be able to localize itself within that map.

- The robot will have to patrol through some waypoints in the map to check for mature fruit.

- The robot needs to detect mature fruit once it is in its field of view, localize it, navigate towards it, and pick it.

The robot's first design was inspired by Saga Robotics' Thorvald robot. As a result, I worked on a package to drive the robot's four independently steerable wheels, which then evolved into the Swerve Steering Package.
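To illustrate what driving four independently steerable wheels involves, here is a minimal sketch of swerve-drive inverse kinematics. It is not taken from the Swerve Steering Package; the function name, the wheel layout `WHEELS`, and the frame convention (x forward, y left, angles counter-clockwise) are assumptions made for this example.

```python
import math
from typing import List, Sequence, Tuple


def swerve_inverse_kinematics(
    vx: float,
    vy: float,
    omega: float,
    wheel_positions: Sequence[Tuple[float, float]],
) -> List[Tuple[float, float]]:
    """Return (steering_angle_rad, wheel_speed_m_s) for every wheel.

    vx, vy: desired body velocity in the robot frame (m/s).
    omega: desired yaw rate (rad/s), counter-clockwise positive.
    wheel_positions: (x, y) of each wheel relative to the robot centre (m).
    """
    commands = []
    for x, y in wheel_positions:
        # Velocity of the wheel contact point: v_body + omega x r.
        wheel_vx = vx - omega * y
        wheel_vy = vy + omega * x
        speed = math.hypot(wheel_vx, wheel_vy)
        # The direction is undefined for a stationary wheel; 0.0 is an
        # arbitrary placeholder chosen for this sketch.
        angle = math.atan2(wheel_vy, wheel_vx) if speed > 1e-9 else 0.0
        commands.append((angle, speed))
    return commands


# Hypothetical wheel layout: front-left, front-right, rear-left, rear-right.
WHEELS = [(0.5, 0.4), (0.5, -0.4), (-0.5, 0.4), (-0.5, -0.4)]

if __name__ == "__main__":
    for angle, speed in swerve_inverse_kinematics(0.3, 0.0, 0.5, WHEELS):
        print(f"steer {math.degrees(angle):7.2f} deg, speed {speed:.3f} m/s")
```

A real driver would typically also limit steering rates and avoid flipping a wheel by 180 degrees when reversing its speed is cheaper; those refinements are left out here.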

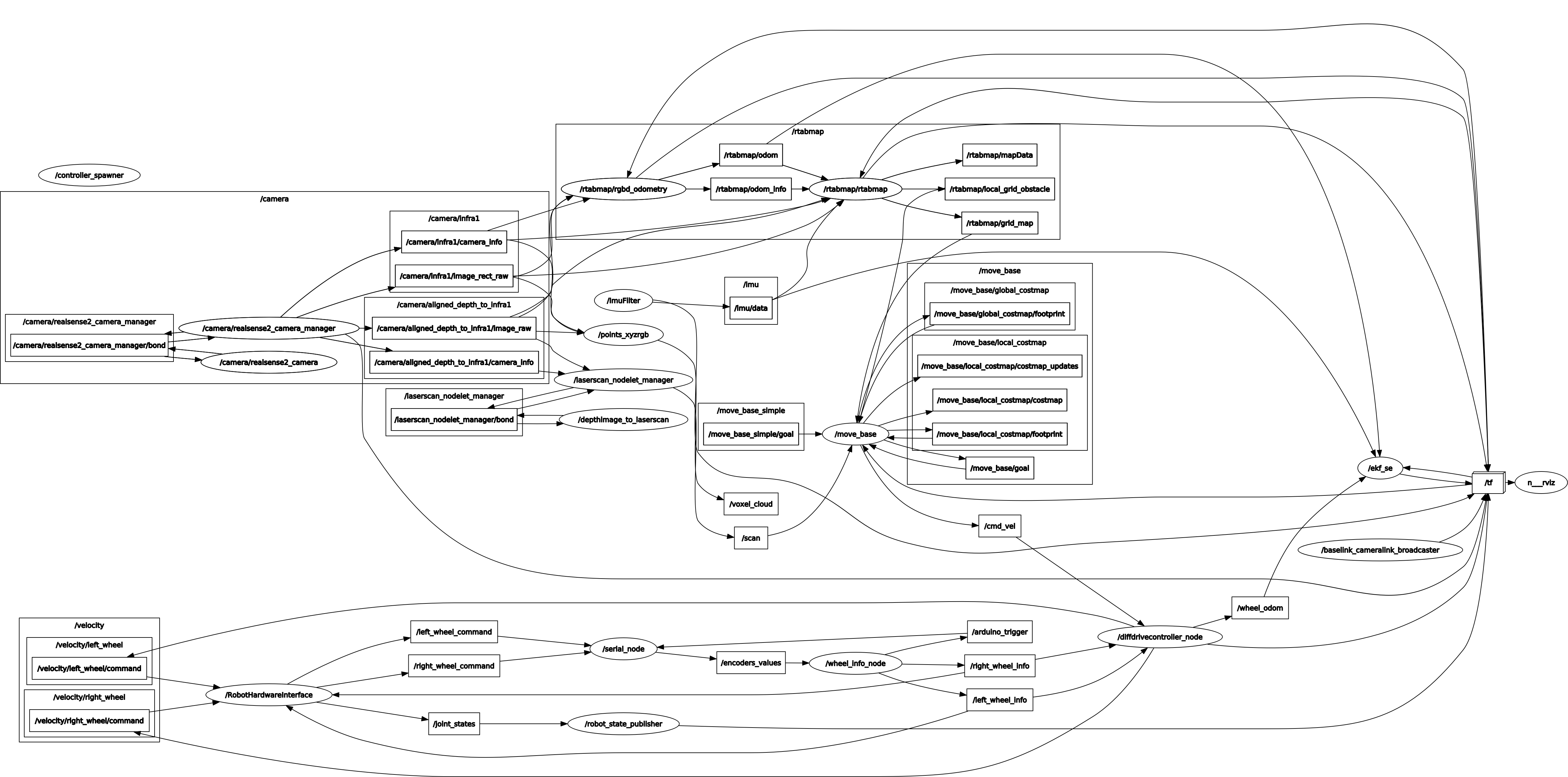

The robot has two D435i cameras, two wheel encoders, and six joint encoders on the robotic arm. The robot's localization depended heavily on the D435i camera, so some modifications were made to the camera's parameters (D435i localization package). Moreover, the robot had to patrol through a set of waypoints, as mentioned in the requirements, so the move_base_sequence package was written for this purpose.
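The patrolling logic can be sketched independently of ROS as a cyclic waypoint sequence that can be paused and resumed. This is only an illustration under stated assumptions: the class `WaypointPatrol` and its methods are hypothetical names for this example and do not reflect the actual interface of move_base_sequence.

```python
from typing import List, Optional, Sequence, Tuple

# A waypoint as (x, y, yaw) in the map frame; the layout is an assumption.
Waypoint = Tuple[float, float, float]


class WaypointPatrol:
    """Cycle through a fixed list of waypoints, with pause and resume."""

    def __init__(self, waypoints: Sequence[Waypoint]) -> None:
        if not waypoints:
            raise ValueError("at least one waypoint is required")
        self._waypoints: List[Waypoint] = list(waypoints)
        self._index = 0
        self._paused = False

    def pause(self) -> None:
        """Stop handing out goals, e.g. while the arm is picking."""
        self._paused = True

    def resume(self) -> None:
        """Continue patrolling towards the goal that was interrupted."""
        self._paused = False

    def current_goal(self) -> Optional[Waypoint]:
        """Goal to send to the navigation stack, or None while paused."""
        if self._paused:
            return None
        return self._waypoints[self._index]

    def goal_reached(self) -> None:
        """Advance to the next waypoint, wrapping around at the end."""
        if not self._paused:
            self._index = (self._index + 1) % len(self._waypoints)


if __name__ == "__main__":
    patrol = WaypointPatrol([(0.0, 0.0, 0.0), (2.0, 0.0, 1.57), (2.0, 3.0, 3.14)])
    for _ in range(4):
        print(patrol.current_goal())
        patrol.goal_reached()
```

Keeping the index unchanged while paused means the robot heads back to the interrupted waypoint after resuming, rather than skipping it.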

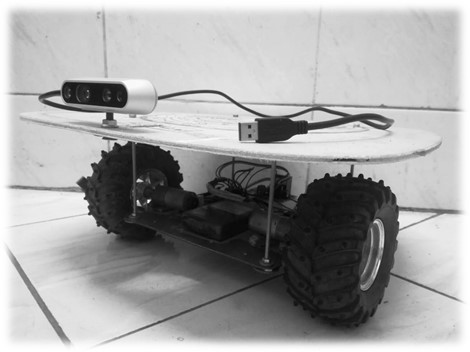

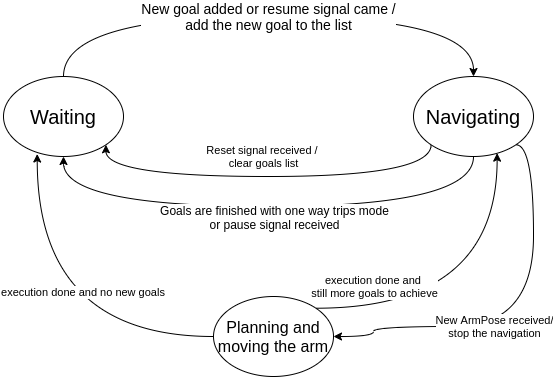

For strawberry detection, we used the YOLACT algorithm. The robot's behaviour was simple: it keeps patrolling through a registered array of waypoints, and a pause service is called once it localizes a mature fruit. It then plans a trajectory for the robotic arm, picks the fruit, and calls a resume service to continue patrolling.

Due to the pandemic and the lockdown, manufacturing the platform was a challenge, so we tested all the algorithms on a small test platform. The following figures show the test platform, the progress we made in manufacturing the platform, the rqt_graph on the robot, and the robot's behaviour diagram.

A very quick demo was made while testing the RGB strawberry instance segmentation. The video shows that only mature fruit, which is suitable for picking, was detected. After that, the grasping point was calculated using the depth image.
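One common way to turn a segmentation mask and a depth image into a 3D grasp point is pinhole deprojection. The sketch below is an assumption about how this could be done, not the project's actual method: it takes the mask centroid, the median valid depth inside the mask, and hypothetical camera intrinsics `fx`, `fy`, `cx`, `cy`, with depth already converted to metres and `0` meaning "no reading".

```python
from statistics import median
from typing import Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Pinhole model: map pixel (u, v) at a given depth to camera-frame XYZ."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def grasp_point_from_mask(
    mask_pixels: Sequence[Tuple[int, int]],
    depth_image: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Point3D]:
    """Estimate a grasp point from the (u, v) pixels of one fruit mask.

    Returns None when the mask is empty or has no valid depth reading.
    """
    if not mask_pixels:
        return None
    # depth_image is indexed as [row][column], i.e. [v][u].
    depths = [depth_image[v][u] for u, v in mask_pixels if depth_image[v][u] > 0]
    if not depths:
        return None
    u_c = sum(u for u, _ in mask_pixels) / len(mask_pixels)
    v_c = sum(v for _, v in mask_pixels) / len(mask_pixels)
    # The median is less sensitive than the mean to background pixels
    # that leak into the mask.
    return deproject(u_c, v_c, median(depths), fx, fy, cx, cy)


if __name__ == "__main__":
    depth = [[0.5] * 4 for _ in range(4)]          # toy 4x4 depth image (m)
    mask = [(1, 1), (2, 1), (1, 2), (2, 2)]        # toy fruit mask pixels
    print(grasp_point_from_mask(mask, depth, fx=2.0, fy=2.0, cx=1.5, cy=1.5))
```

The resulting point is expressed in the camera frame; it would still need to be transformed into the arm's base frame before trajectory planning.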

This video shows navigation with the test platform (D435i localization was used).

This video shows the robotic platform in simulation.

The last video shows the robot localizing an object, stopping, and moving the arm to grasp it. Grasping was not simulated (fruit should be handled with a soft gripper).